Unreal Fest 2023 Post Mortem

Aaaand that’s a wrap! (This is the language I’m learning from my peers on the media side of the house. More on that in a bit.) It’s 3:30PM Central Time on Thursday, and Unreal Fest 2023 has officially ended. It has been a packed three days, and I’m a bit surprised to report that even an introvert like me managed to get some networking accomplished.

For those who could not make it, most of the talks are going to be posted by Epic on their Unreal Engine YouTube channel. I highly recommend checking them out. I know I will; many of the best talks were scheduled against each other on different tracks, so I’ll be catching a lot of them on playback myself.

There was one major takeaway I came back with after all the talks, but I’ll save that one until the end. Before that, here are some other things I learned this week.

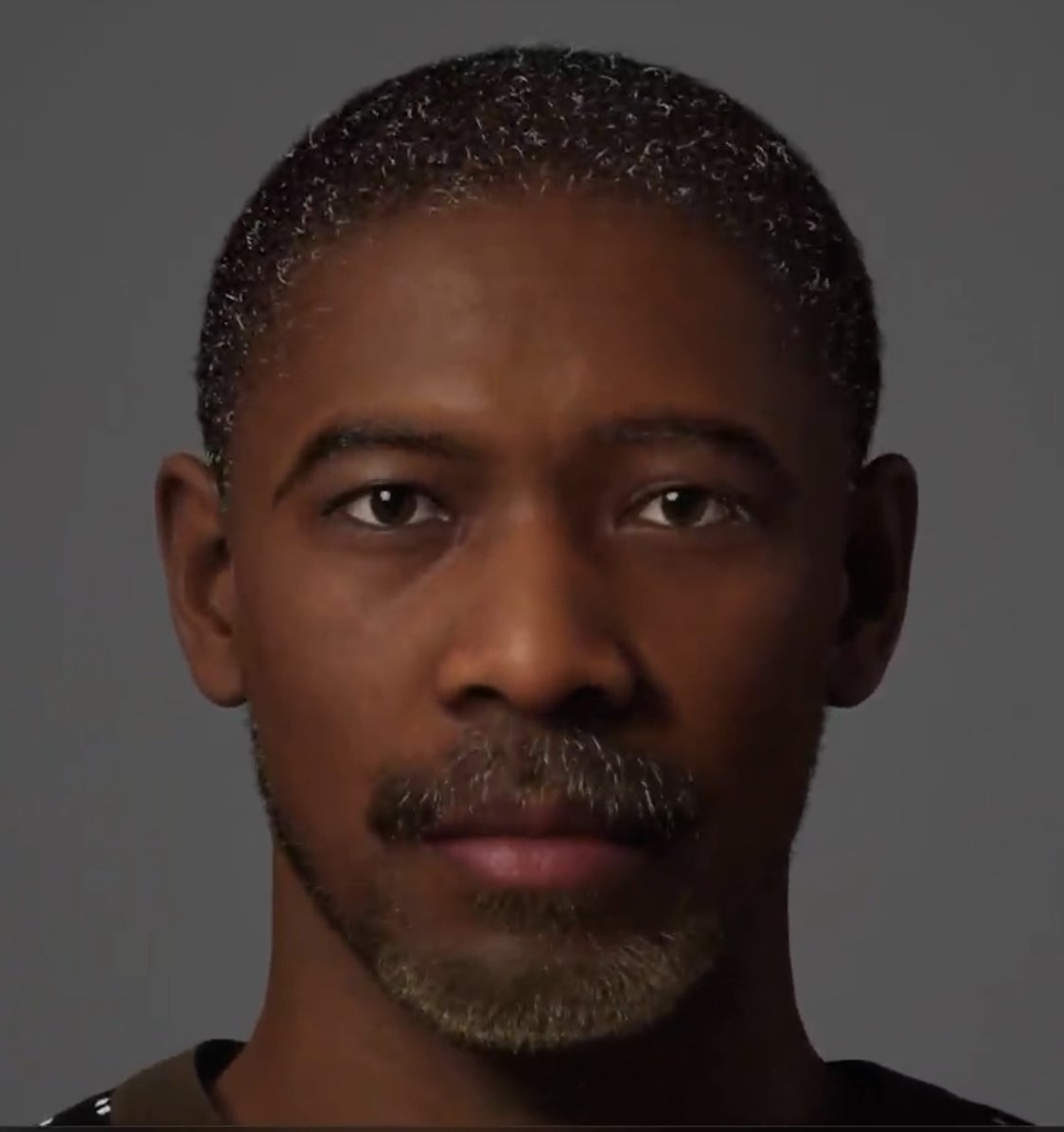

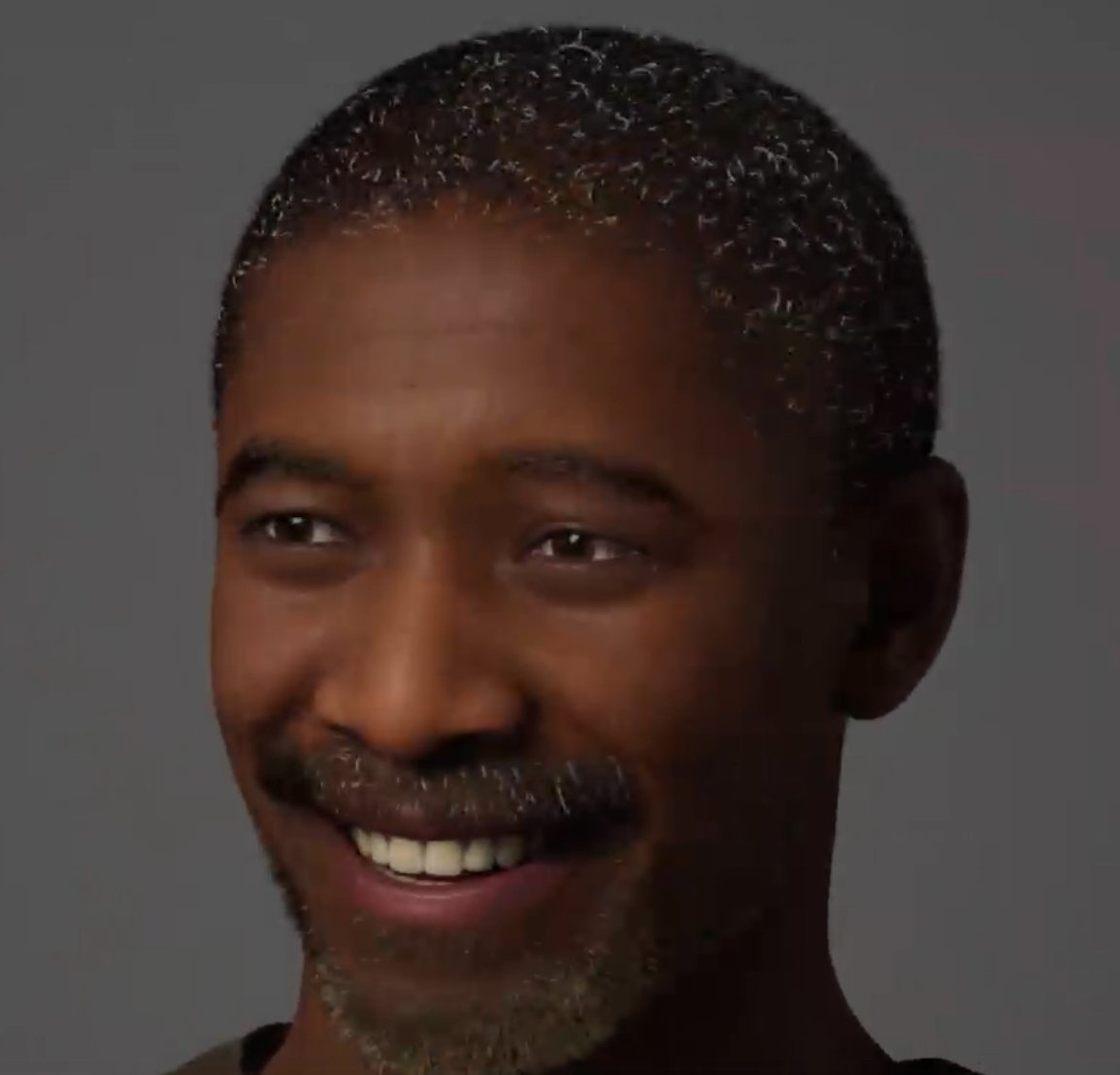

1) Metahumans continue to be amazing tech. They were on full display on the floor of the convention, with good reason. Just look at these early prototypes of some of our NPCs, running in real time:

We started playing with Metahuman just last week, and we were already able to use its editor to craft these faces and bodies and export them to our Unreal test environment. Under the hood, there is a ton of complexity going on in there, as you can probably tell just by looking at the output. While exporting Metahumans to other tools like Maya or Blender to manipulate them outside the Unreal ecosystem is definitely possible, it is not easy, and it requires a lot of Python scripting and a deep understanding of Metahuman to set up. Also, boy are those a lot of polygons in that mesh. Like, a lot.

2) Unreal Engine isn’t just for gamers anymore. This is old news for industry followers, but I hadn’t realized it until I started walking the floor, mingling with the attendees, and checking out all the talks. Turns out UE has become a widely adopted tool for commercials, animation studios, and mixed media entertainment (e.g. sports). This helps explain why photorealism is so big for Unreal right now. Many of the target users of the tool don’t need to run UE in real time on 4-year-old laptops. For these industries, if you have a 4090 graphics card rendering in real time for a broadcast recording, you’ve got all the hardware you need (personally, mine is on back order, so I have to make do with my poor 4-year-old laptop for now). And many of these customers want to craft photorealistic experiences, not stylized ones. These experiences are jaw-droppingly beautiful, and not actually all that difficult to create in Unreal with the tools UE5 is providing and refining as we speak. Folks are building incredible level demos and posting them to Unreal social channels every day now.

3) Sound tech is advancing too. It’s not as splashy as visual effects, but the sound team at Epic and the third parties around the Unreal ecosystem are killing it when it comes to building and refining sound tooling. It was great to spend a couple of sessions learning about the cutting edge of sound design, since audio is such a crucial component of immersion.

4) Snoop Dogg is a pretty cool dude. Sure, you knew that already, but did you know he was “imma hang out at that Fortnite conference” kind of cool?

There’s a guy interviewing Snoop and Champ but he didn’t make the shot. Sorry guy.

There was a time when the world was a song/And the song was exciting

-Fantine, Les Misérables

All in all, it was incredibly worthwhile, and my life is richer for the people I have met down here in New Orleans this week. I look forward to following them on their own journeys and getting their feedback as we deliver POCs of our game for testing down the line.

But what was that major takeaway? It weaves together points 1 and 2 above, about Metahumans and the non-gaming target market for Unreal these days, with our own goals around VR as a platform. Remember that 4090 graphics card to render these models? Yeah, that ain’t the Meta Quest. The Meta Quest (even the 3 that just came out) is considered “mobile VR” because it is a standalone headset built on a mobile chipset and an Android-based operating system. What’s worse, for VR software to be performant, it needs to render the scene from two slightly different viewpoints, one per eye, and ideally at 90+ frames per second to reduce the risk of nausea.
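To put rough numbers on that frame rate, here is a back-of-the-envelope sketch in Python. The function names are my own, the 30 and 60 fps rows are only there for comparison, and the even per-eye split is a deliberate simplification: real engines can share a good deal of work between the two eye views, so treat the per-eye figure as a worst-case intuition rather than a measurement.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one complete frame, in milliseconds."""
    return 1000.0 / target_fps


def per_eye_budget_ms(target_fps: float, eyes: int = 2) -> float:
    """Naive per-view budget, assuming each eye's view is rendered
    one after the other with no shared work (a simplification)."""
    return frame_budget_ms(target_fps) / eyes


if __name__ == "__main__":
    # Compare a few common frame-rate targets against the 90 fps VR goal.
    for fps in (30, 60, 90):
        print(
            f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
            f"about {per_eye_budget_ms(fps):.1f} ms per eye"
        )
```

At 90 frames per second that works out to roughly 11 milliseconds for everything in a frame, on mobile hardware, which is a very different world from a 4090 rendering a broadcast shot.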

Long story short, while we spent the past couple of weeks naïvely playing with photorealistic assets and Metahumans as we started to learn UE5, we were unwittingly already blasting way past the kind of content that our target hardware would be able to support. So perhaps the most important lesson I learned this week was that we need to reassess our expectations of what we can build with Unreal that would actually be playable on a Meta Quest VR headset. It turns out, we now know, that with Unreal, just because you can do a thing does not mean you should do a thing. So as we return from the Fest, we will work on redrawing some boundaries around our target levels and make sure we stay within them. Much better to learn this now than later.

Thanks for sticking with the blog post all the way to the end, and if you are new to the blog as a result of Unreal Fest, welcome! It is great to have you here. For those who couldn’t make it, if you have any questions feel free to hit me up and I’ll do my best to answer.

As ever,

Justin @ Archmagus